The boundaries between HPC and AI workloads are becoming increasingly blurred

The modern data center is undergoing a significant transformation driven by increasing complexity and widespread integration of artificial intelligence (AI).

This change is characterized by the convergence of high-performance computing (HPC) and AI workloads, which is driving innovation at every layer of the infrastructure, from chip design to cooling systems.

Vik Malyala, Managing Director & President for EMEA at Supermicro.

The size of today's deep learning models, especially large language models (LLMs) and foundation models, requires computational resources previously used for advanced scientific supercomputing. This is pushing AI into the HPC domain in terms of infrastructure.

Rethinking compute and architecture

This convergence represents a significant shift in how data center designers use and configure processors. In the past, data centers mainly depended on general-purpose, multi-core central processing units (CPUs) to perform many tasks.

While CPUs are still vital for some sequential or low-priority tasks, the parallel nature of modern AI algorithms, such as neural network training, requires specialized hardware. As a result, more advanced graphics processing units (GPUs) have become the preferred hardware for AI workloads.

The growing complexity of AI models, which can involve billions or even trillions of parameters, requires high-performance parallel processing on an unprecedented scale.

This has fundamentally changed data center architecture, accelerating the adoption of multi-GPU systems and advanced accelerators. Simply installing many GPUs in a rack isn’t enough; they must communicate with each other seamlessly and quickly.

This has led to the development of advanced interconnect technologies, such as high-speed InfiniBand, as well as specialized Ethernet fabrics, which provide low-latency, high-bandwidth communication pathways essential for efficient collective operations during distributed training.

The performance of these interconnects often determines the overall scaling and training time for large AI models.
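To make the link between interconnect speed and training time concrete, here is a rough sketch using the commonly cited latency-plus-bandwidth cost model for a ring all-reduce. The function name, the gradient size, the GPU count, and the two fabric profiles are illustrative assumptions, not figures from any vendor or from this article.

```python
def ring_allreduce_seconds(message_bytes, num_gpus, link_bytes_per_s, latency_s):
    """Estimate ring all-reduce time with a simple latency + bandwidth model.

    Assumes every GPU sends one chunk per step over a link of the given
    speed and that steps do not overlap (a deliberate simplification).
    """
    if num_gpus < 2:
        return 0.0
    steps = 2 * (num_gpus - 1)          # reduce-scatter phase + all-gather phase
    chunk = message_bytes / num_gpus    # bytes each GPU sends per step
    return steps * (latency_s + chunk / link_bytes_per_s)


# Hypothetical workload: 10 GB of gradients synchronized across 64 GPUs.
grad_bytes = 10e9
fabrics = [
    ("slower fabric (assumed 12.5 GB/s, 5 us)", 12.5e9, 5e-6),
    ("faster fabric (assumed 50 GB/s, 2 us)", 50e9, 2e-6),
]
for name, bandwidth, latency in fabrics:
    t = ring_allreduce_seconds(grad_bytes, 64, bandwidth, latency)
    print(f"{name}: {t:.3f} s per all-reduce")
```

Because this synchronization can recur on every training step, even a modest per-step difference compounds over a long run, which is one way to read the claim that the fabric often sets the scaling limit.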

The new infrastructure considerations: Power, storage, and cooling

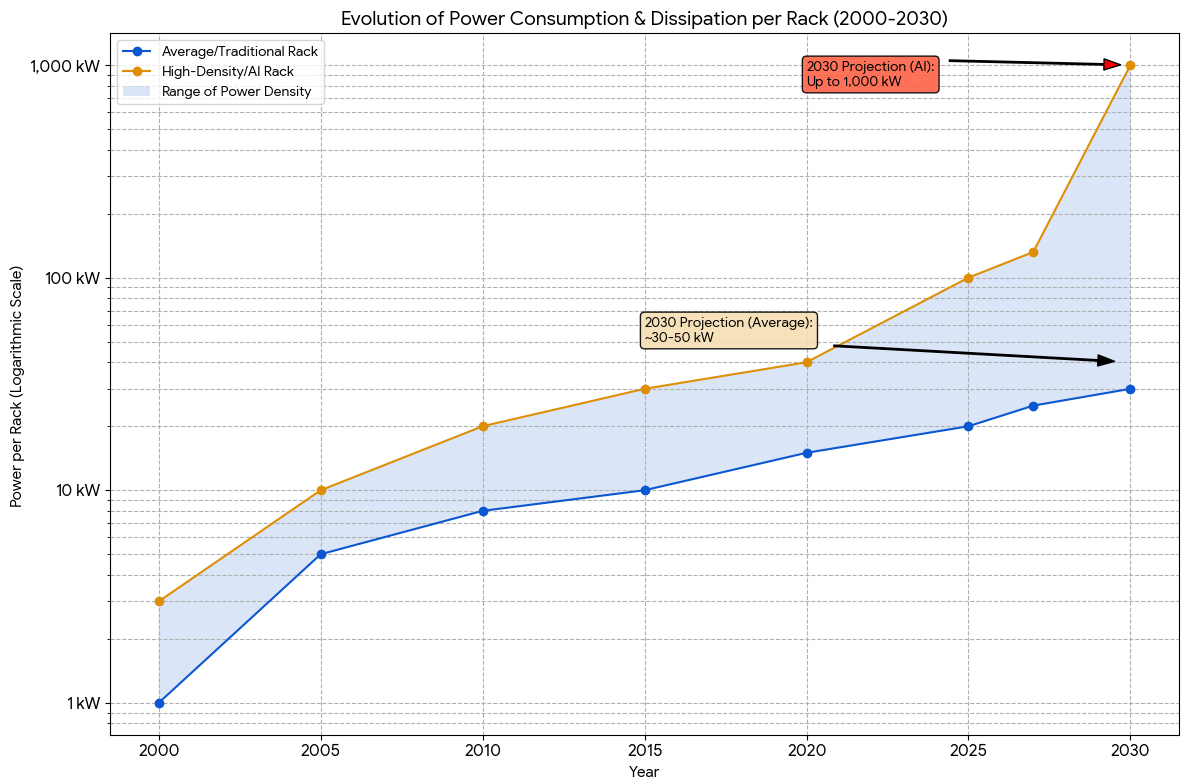

The shift to high-density GPU clusters has introduced significant engineering challenges, especially in power and thermal management. The compute and power density in AI/HPC racks far exceed those of traditional enterprise racks, leading to higher rack-level power demands.

This has prompted data center designers to reevaluate power distribution units (PDUs) and uninterruptible power supplies (UPS), focusing on higher-voltage, more efficient power-delivery systems.
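A back-of-the-envelope calculation suggests why higher distribution voltages are attractive. The sketch below estimates rack power and the resulting three-phase line current at two voltages. The server count, GPU count, TDP, per-server overhead, power factor, and voltages are all assumed values chosen for illustration, not specifications of any real product.

```python
import math


def rack_power_watts(servers, gpus_per_server, gpu_tdp_w, other_w_per_server):
    """Approximate rack power as GPU power plus everything else per server."""
    return servers * (gpus_per_server * gpu_tdp_w + other_w_per_server)


def three_phase_line_current(power_w, line_voltage_v, power_factor=0.95):
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V * pf)."""
    return power_w / (math.sqrt(3) * line_voltage_v * power_factor)


# Hypothetical rack: 4 servers, 8 GPUs each at 700 W, plus 3 kW of other load per server.
power = rack_power_watts(servers=4, gpus_per_server=8, gpu_tdp_w=700, other_w_per_server=3000)
print(f"Estimated rack power: {power / 1000:.1f} kW")

for volts in (208, 415):  # assumed line-to-line voltages
    amps = three_phase_line_current(power, volts)
    print(f"At {volts} V: about {amps:.0f} A per line")
```

For the same power, current falls in proportion to voltage, and resistive losses in the conductors fall with the square of the current, which is broadly why denser racks push designers toward higher-voltage delivery.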

The varied demands of AI workloads require tailored infrastructure. Training large models involves supplying massive, often terabyte-scale, datasets to accelerators at very high speeds to prevent GPU starvation.

This results in the use of various high-performance storage options, such as parallel file systems supported by flash storage (NVMe solid state drives). This approach ensures that the input-output (I/O) subsystem does not become a bottleneck, thereby maximizing the efficiency of expensive computing resources.
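The starvation risk can be sized with simple arithmetic: the storage tier has to deliver at least as many bytes per second as the accelerators consume. The sketch below is a minimal illustration. The cluster size, per-GPU sample rate, sample size, and storage throughput are hypothetical, and the model ignores caching and prefetching.

```python
def required_read_throughput(num_gpus, samples_per_s_per_gpu, bytes_per_sample):
    """Aggregate bytes per second the storage tier must deliver to keep GPUs fed."""
    return num_gpus * samples_per_s_per_gpu * bytes_per_sample


def gpu_utilization_ceiling(required_bps, storage_bps):
    """Upper bound on GPU utilization when storage is the only bottleneck."""
    return min(1.0, storage_bps / required_bps)


# Hypothetical cluster: 256 GPUs, each consuming 2,000 samples/s of 150 KB each.
need = required_read_throughput(256, 2000, 150e3)
print(f"Required read throughput: {need / 1e9:.1f} GB/s")

# Hypothetical storage tier delivering 40 GB/s in aggregate.
ceiling = gpu_utilization_ceiling(need, 40e9)
print(f"Utilization ceiling with 40 GB/s of storage: {ceiling:.0%}")
```

When the ceiling falls below 100%, the accelerators sit idle for part of every step, and that is the gap parallel file systems on NVMe flash are meant to close.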

An essential component of this convergence is the issue of how to cool the systems. Air cooling struggles to remove the massive heat generated by modern high-TDP (thermal design power) accelerators.

Technologies such as direct-to-chip liquid cooling and immersion cooling are transitioning from HPC applications to mainstream AI data center use, offering improved energy efficiency and enabling much higher rack densities.
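A first-order heat balance shows why liquid is so much more effective than air. The flow needed to carry away a given heat load follows from Q = m × c_p × ΔT. The sketch below compares water and air for a hypothetical 100 kW rack with a 10 K coolant temperature rise. The fluid properties are approximate textbook values, and the rack figure is an assumption.

```python
def coolant_flow_l_per_min(heat_w, delta_t_k, specific_heat_j_per_kg_k, density_kg_per_m3):
    """Volumetric flow (litres per minute) needed to absorb heat_w at a given temperature rise."""
    mass_flow_kg_s = heat_w / (specific_heat_j_per_kg_k * delta_t_k)  # from Q = m * c_p * dT
    volume_m3_s = mass_flow_kg_s / density_kg_per_m3
    return volume_m3_s * 1000 * 60  # m^3/s -> L/min


RACK_HEAT_W = 100_000   # hypothetical 100 kW rack
DELTA_T_K = 10          # assumed coolant temperature rise

# Approximate properties near room temperature.
water = coolant_flow_l_per_min(RACK_HEAT_W, DELTA_T_K, 4186, 997)
air = coolant_flow_l_per_min(RACK_HEAT_W, DELTA_T_K, 1005, 1.2)

print(f"Water: about {water:,.0f} L/min")
print(f"Air:   about {air:,.0f} L/min")
```

Under these assumptions, air needs a volumetric flow thousands of times larger than water for the same heat load, which is consistent with air cooling running out of headroom as rack densities climb.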

Scalability, modularity, and future-proofing

Organizations are quickly expanding their AI initiatives to boost productivity and operational efficiency. To support this continuous growth, infrastructure investments need to be adaptable and future-proof, leading to an increased preference for flexible and modular server designs.

These systems enable businesses to manage energy use and space more cost-effectively. Since AI workloads constantly evolve, the ability to easily upgrade or expand compute and storage components, without costly infrastructure overhauls, provides a vital competitive edge and helps lower the total cost of ownership.

Many of the new technologies needed by an AI data center originally came from the HPC field. IT managers responsible for installation must look beyond the performance of individual servers and view the entire system as a single, parallel machine, where all components work together.

This involves carefully analyzing network topology to reduce latency and increase bisection bandwidth, and examining storage components to ensure they handle the I/O demands of the accelerators.

By aligning their current capabilities, incorporating necessary third-party infrastructure where proprietary solutions don’t work, and customizing infrastructure design to match the expected nature of future AI workloads, businesses can achieve an optimal balance.

This strategic alignment is essential for leveraging the power of HPC-AI convergence, allowing organizations to expand their innovation without incurring excessive costs.

Summary

A new type of data center is emerging, designed explicitly for AI workloads. Many of the optimizations developed over the years for HPC data centers are now being applied to AI data centers.

Although some hardware components may differ depending on the workload, there are valuable lessons to learn and experiences to be transferred.

This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro
